Week #19

04 Mar 2019 Learning rate tuning

This week I tuned the learning rate to find a suitable learning rate strategy for slice 5.

I tried three learning rate strategies, two of them using torch.optim.lr_scheduler.StepLR(optimizer, step_size, gamma=0.1, last_epoch=-1) in PyTorch, where gamma is the multiplicative factor of the learning rate decay and step_size is the period of the decay in epochs.

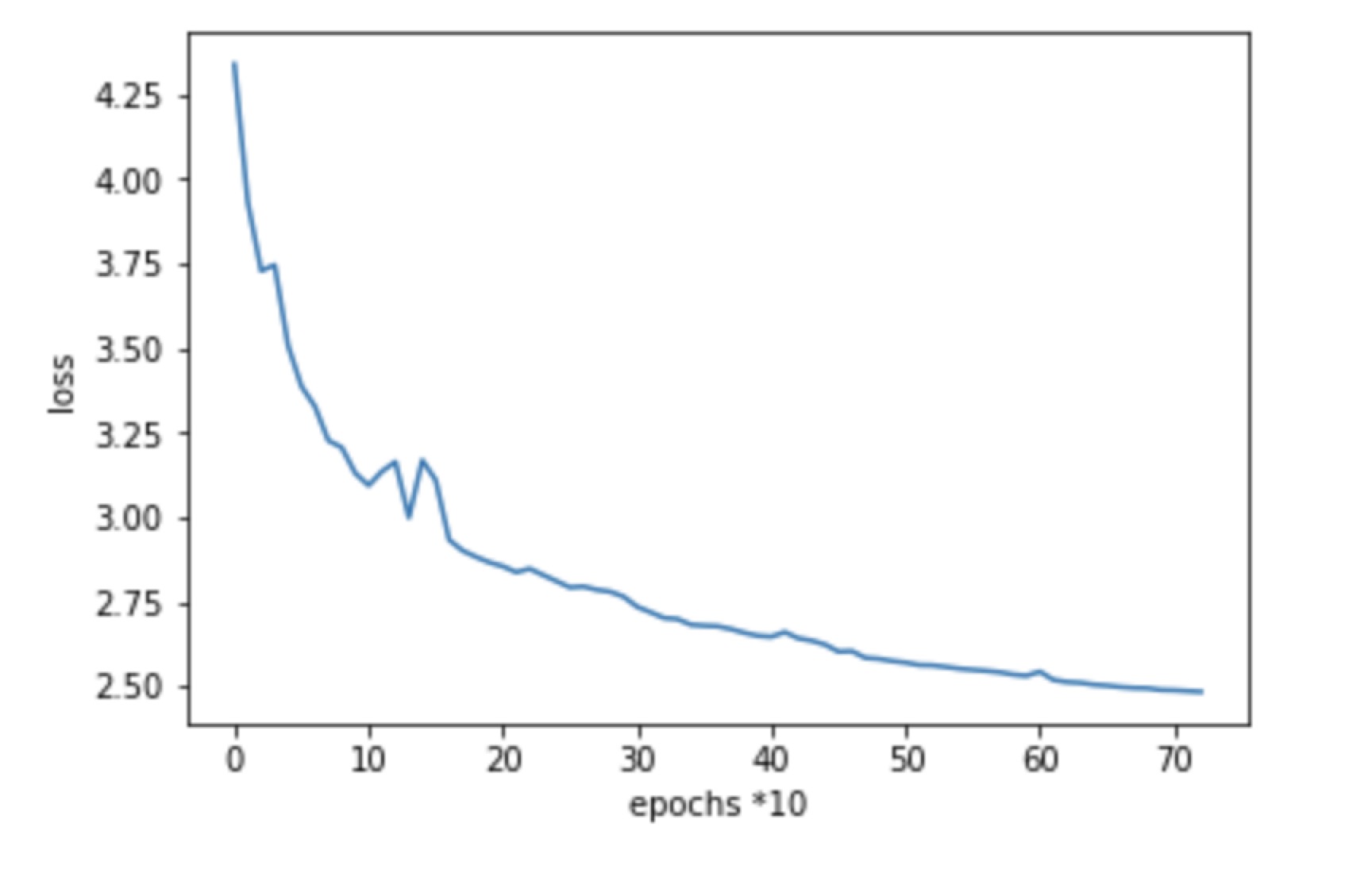

- learning_rate’s initial value: 0.002, gamma: 0.5, step_size: 150

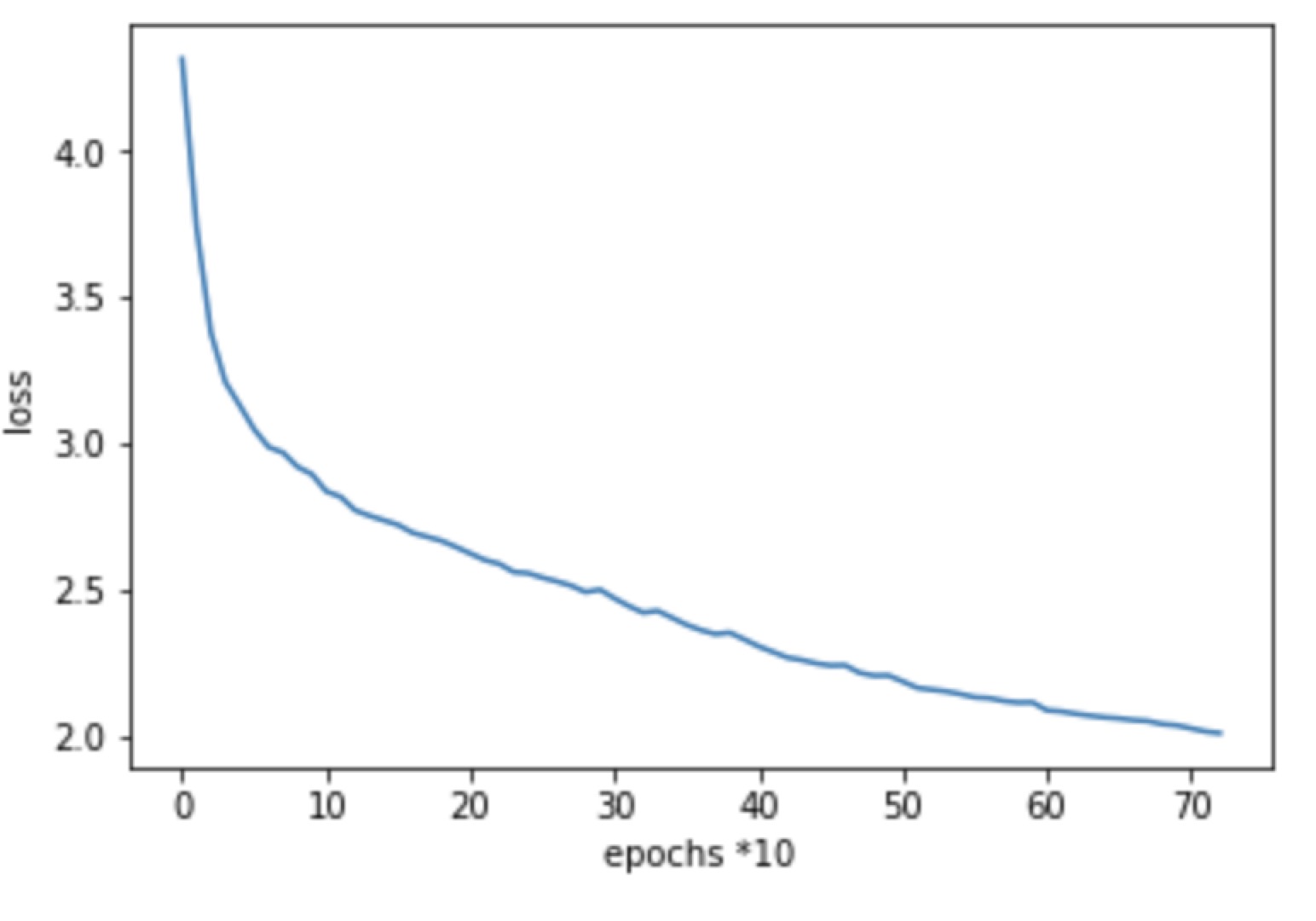

- learning_rate’s initial value: 0.001, gamma: 0.8, step_size: 100

- no scheduler, learning_rate: 0.001

- epoch 0-150, lr=0.002

- epoch 150-300, lr=0.001

- epoch 300-450: lr = 0.0005

- epoch 450-600: lr = 0.00025

- epoch 600-750: lr = 0.000125

- epoch 0-100: lr = 0.001,

- epoch 100-200: lr = 0.0008

- epoch 200-300: lr = 0.00064

- epoch 300-400: lr = 0.000512

- epoch 400-500: lr = 0.0004096

- epoch 500-600: lr = 0.00032768

- lr = 0.001
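The step-wise values listed above can be reproduced with a few lines of plain Python. This is only a sketch of the decay rule (the rate is multiplied by gamma once every step_size epochs), not the training code itself; the helper name `step_lr` is made up for illustration.

```python
def step_lr(initial_lr, gamma, step_size, epoch):
    """Learning rate at `epoch` under a StepLR-style schedule (hypothetical helper)."""
    # The rate is multiplied by gamma once for every completed block of step_size epochs.
    return initial_lr * gamma ** (epoch // step_size)


# (initial_lr, gamma, step_size) for the two scheduled settings listed above.
settings = [
    (0.002, 0.5, 150),
    (0.001, 0.8, 100),
]

for initial_lr, gamma, step_size in settings:
    print(f"initial_lr={initial_lr}, gamma={gamma}, step_size={step_size}")
    # Print the rate at the start of each decay period.
    for epoch in range(0, 601, step_size):
        lr = step_lr(initial_lr, gamma, step_size, epoch)
        print(f"  from epoch {epoch:3d}: lr = {lr:.8f}")
```

In a real training loop the same schedule comes from calling scheduler.step() once per epoch after the optimizer update.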

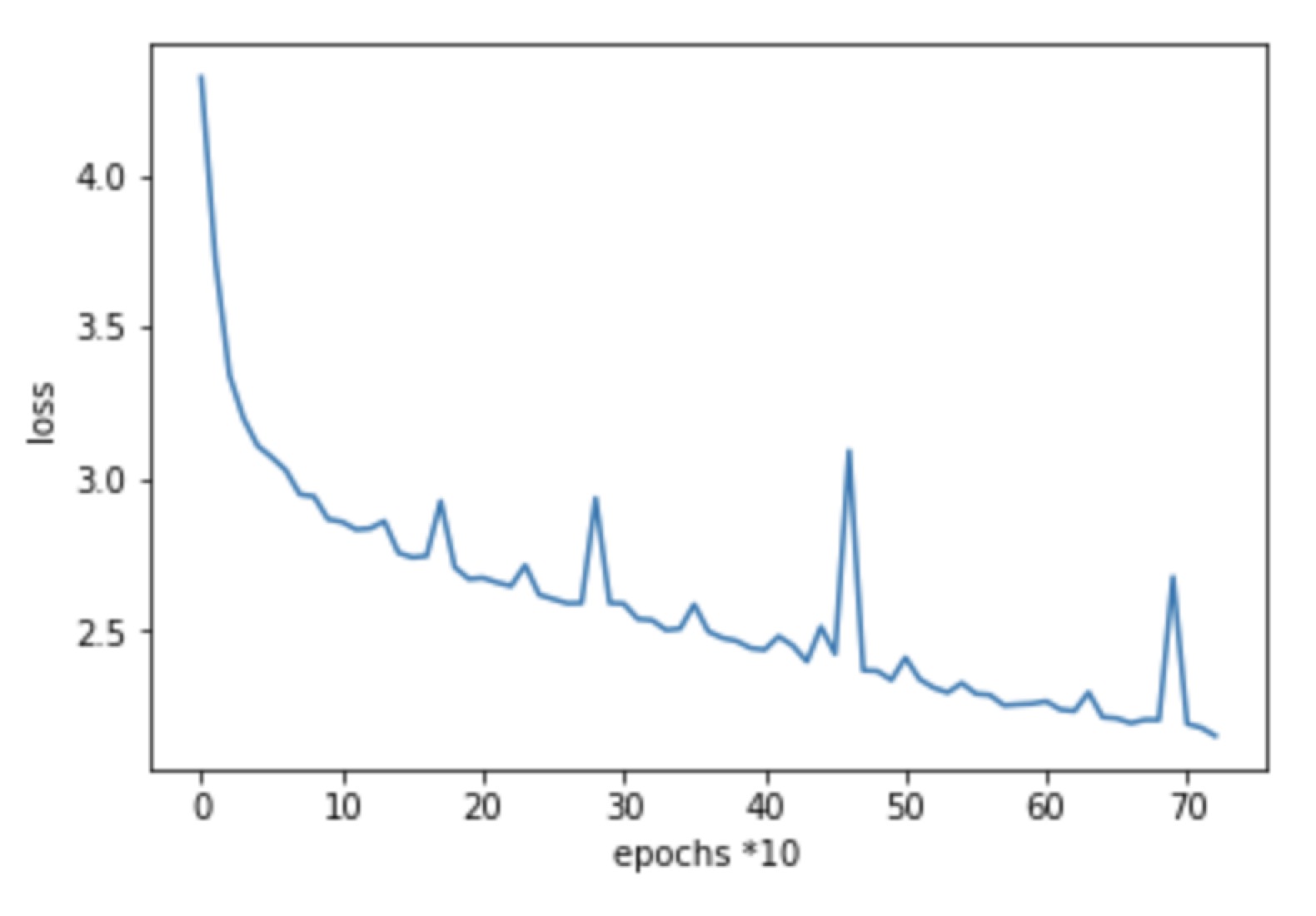

Since the loss no longer decreases efficiently after 300 epochs, I tried another strategy using torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones, gamma=0.1, last_epoch=-1), which keeps the learning rate constant between milestones and multiplies it by gamma each time a milestone epoch is reached.

- learning_rate’s initial value: 0.001, gamma: 0.1, milestones=[200, 400], which means
  - lr = 0.001 if epoch < 200
  - lr = 0.0001 if 200 <= epoch < 400
  - lr = 0.00001 if epoch >= 400
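The milestone rule can be checked the same way. The sketch below assumes the rate is multiplied by gamma once for every milestone that has been reached; `multistep_lr` is a hypothetical helper name, not a PyTorch function.

```python
from bisect import bisect_right


def multistep_lr(initial_lr, gamma, milestones, epoch):
    """Learning rate at `epoch` under a MultiStepLR-style schedule (hypothetical helper)."""
    # bisect_right counts how many milestones are <= epoch,
    # i.e. how many times the rate has been decayed so far.
    decays = bisect_right(sorted(milestones), epoch)
    return initial_lr * gamma ** decays


# Settings from the list above: initial rate 0.001, gamma 0.1, milestones [200, 400].
for epoch in (0, 199, 200, 399, 400, 599):
    lr = multistep_lr(0.001, 0.1, [200, 400], epoch)
    print(f"epoch {epoch:3d}: lr = {lr:.6f}")
```

Unlike StepLR, the decay points do not have to be evenly spaced, so the drops can be placed where the loss curve flattens.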

Another approach

DeepNAT [1] separates foreground from background before the segmentation. In our case the background accounts for a very large share of the pixels, so it is necessary to remove the background first. There is a simple implementation of this in the QuickNAT repository on GitHub: it simply adds up all the pixels whose class frequency is less than a threshold. The same strategy will be used.
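As a rough illustration of threshold-based background removal, the sketch below drops every slice whose background fraction reaches a threshold. This is one possible reading of the idea and not QuickNAT's actual code: the function names, the 0.99 threshold, the use of label 0 for background, and the nested-list slice format are all assumptions made for the example.

```python
from collections import Counter


def background_fraction(label_slice, background_label=0):
    """Fraction of pixels in a 2D label slice that carry the background label."""
    counts = Counter(value for row in label_slice for value in row)
    total = sum(counts.values())
    # An empty slice is treated as pure background.
    return counts[background_label] / total if total else 1.0


def drop_background_slices(images, labels, max_background=0.99, background_label=0):
    """Keep only (image, label) pairs whose background fraction is below the threshold."""
    kept = [
        (image, label)
        for image, label in zip(images, labels)
        if background_fraction(label, background_label) < max_background
    ]
    return [image for image, _ in kept], [label for _, label in kept]


# Toy data: the first slice is pure background, the second contains two classes.
labels = [
    [[0, 0], [0, 0]],
    [[0, 1], [2, 0]],
]
images = ["slice_a", "slice_b"]  # placeholders standing in for image arrays

kept_images, kept_labels = drop_background_slices(images, labels)
print(kept_images)
```

With real volumes the same filter would normally be written with NumPy arrays; plain lists are used here only to keep the example free of dependencies.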

References

[1] C. Wachinger, M. Reuter, and T. Klein, “DeepNAT: Deep Convolutional Neural Network for Segmenting Neuroanatomy,” NeuroImage, vol. 170, pp. 434–445, Apr. 2018.